The Ripple Effect

-News and Commentary-

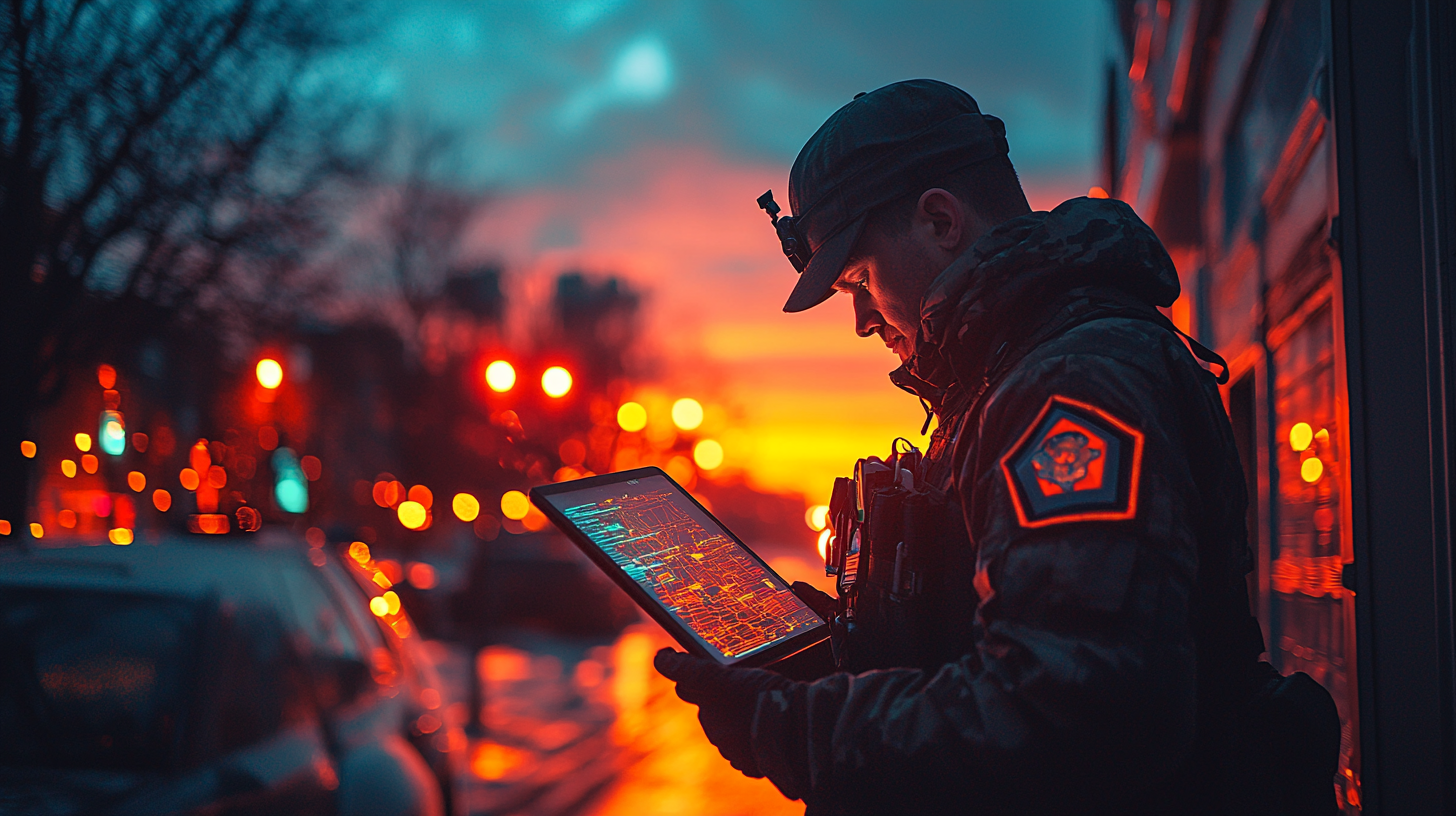

AI Police Surveillance Expands in Major U.S. Cities

You might not see it, but it sees you.

Across major U.S. cities like New York, Chicago, Atlanta, and Los Angeles, law enforcement agencies are rapidly expanding the use of artificial intelligence in day-to-day policing. We’re not just talking traffic cams or bodycams — we’re talking facial recognition systems tied to vast databases, license plate readers mounted to cruisers scanning thousands of tags per shift, predictive crime software mapping entire neighborhoods based on past behavior, and real-time monitoring of social media posts for “threat signals.”

All of it powered by AI. All of it backed by millions in government contracts. All of it sold under the banner of “public safety.”

But here’s the question: Safe for whom?

This expansion isn’t happening slowly. It’s happening quickly. It’s being layered on top of already over-policed Black and Brown communities. And the same technology that’s hailed as “smart policing” is also raising serious red flags for civil rights, privacy, and systemic bias.

We’ve seen this movie before — just not with this much code.

The post-9/11 security state laid the foundation. Tech companies, hungry for data and contracts, provided the tools. AI simply connected the dots — faster, more efficiently, and often without any real oversight. In a post-George Floyd America, some police departments promised reform. Instead, they’re adopting smarter surveillance with fewer questions asked. What was once a human decision is now run through an algorithm. And those algorithms? They’re built on the same historical data that already reflects bias.

What makes this even more alarming is the lack of transparency. Most of the time, you don’t know if you’re being watched, flagged, or tracked. You don’t know what list you’re on. You don’t even know who built the AI or how it decides what it sees as “suspicious.”

It’s surveillance without conversation.

And cities aren’t just rolling this out quietly — they’re scaling it, funding it, and in some cases, using it without even telling the public. From the NYPD’s use of Digidog robotic units and facial recognition to LAPD’s data-driven “Community Safety Partnership,” what used to be science fiction is now standard issue.

That’s where the ripple begins.

Because once surveillance gets embedded in a city’s infrastructure — once it becomes part of how you move, speak, protest, and even just walk down the street — you can’t just roll it back. You have to fight to even know it’s there.

And most people don’t even know where to begin.

The rapid growth of AI in policing isn’t just tech innovation. It’s the result of a deep partnership between local police departments, federal funding sources, and private tech corporations. Major players like Amazon’s Ring, Palantir, Clearview AI, and Axon are now embedded in city policing infrastructure. In New York, the NYPD has over 15,000 surveillance cameras across the five boroughs, many enhanced with facial recognition. Chicago PD deploys ShotSpotter, a gunshot detection system tied into real-time response networks. In Atlanta, AI-powered license plate readers scan cars and instantly flag vehicles linked to people with outstanding warrants. Smaller cities are joining the wave too, often implementing systems quietly, with little public disclosure or understanding.

What’s actually happening on the ground is more than just surveillance — it’s data-driven law enforcement with automated assumptions. Facial recognition scans are being run on crowds at events, protests, and even traffic stops. Predictive policing algorithms, sold as proactive, often replicate decades-old racial patterns by flagging certain neighborhoods repeatedly as high-risk zones. Police are also monitoring social media platforms in real time, using AI to create behavioral profiles from posts, emojis, and language patterns. Body cameras, which once promised accountability, are now being paired with AI that can “flag” behavior — essentially teaching a machine to decide what looks suspicious.

This isn’t a new story, but it’s a rapidly evolving one. After 9/11, the government justified mass surveillance under national security. In the years since, that same infrastructure has shifted inward, becoming a domestic tool for monitoring citizens, particularly in poor, minority communities. Starting around 2010, local law enforcement began receiving federal grants and private contracts to upgrade their surveillance capabilities. By 2020, the push to “modernize policing” became a priority — not just with weapons, but with data. What was once a pilot program or beta test is now active in major metropolitan areas with little oversight, and even less conversation.

What makes this so dangerous is the illusion that technology removes bias. In truth, AI doesn’t erase injustice — it automates it. These systems are trained on historical policing data, and that data is soaked in decades of over-policing, racial profiling, and discriminatory enforcement. So when an algorithm flags someone as suspicious, it’s usually echoing patterns built on injustice. Without new data and new intent, these “smart” systems simply reinforce old problems at scale — and much faster.

The more cities adopt AI policing tools without community oversight or ethical guardrails, the harder it becomes to pull back. Once these tools are installed and normalized, they become invisible — part of the landscape. And when the systems fail or harm someone, it’s nearly impossible to challenge them, because the decision wasn’t made by a human officer — it was made by a black box algorithm.

This is why activists, civil rights lawyers, and tech ethicists are raising the alarm. We’re not just talking about cameras or software. We’re talking about a full-blown infrastructure of surveillance that tracks movements, analyzes faces, interprets language, and predicts behavior — often without the subject ever knowing it happened.

Code can’t fix culture. AI can’t create accountability. If anything, it’s starting to shield police from it. The real danger here isn’t just the technology. It’s how easily we’re accepting it.

AI surveillance feels futuristic, but the logic behind it is old: control the “dangerous” parts of society through power, not partnership. This isn’t about hating innovation — it’s about knowing when innovation is being used against us. Without transparency, regulation, or informed consent, this isn’t a tool for safety — it’s a blueprint for silent authoritarianism.

And the scariest part? Most people won’t even notice until it’s too late.

Would you know if your city was using AI to track your movements?

Who’s deciding what “suspicious behavior” looks like in an AI database?

Should we treat algorithmic policing like a public utility — regulated and transparent?

What happens when a system flags someone for something they might do?
